Boldium hosted another excellent forum on AI this week, this time emphasizing how machine learning integrates with visual design. Nick Foster offered a fascinating metaphor for AI: an overdriven amplifier pushing the input into fuzzy distortion. His opening slide showed Black Flag on stage. I could hear Greg Ginn’s crackling plexiglass Ampeg guitar twisting out a chromatic swirl of notes in the same way that DALL-E 3 tears away the curtains before a window into a disturbing nightmare realm.

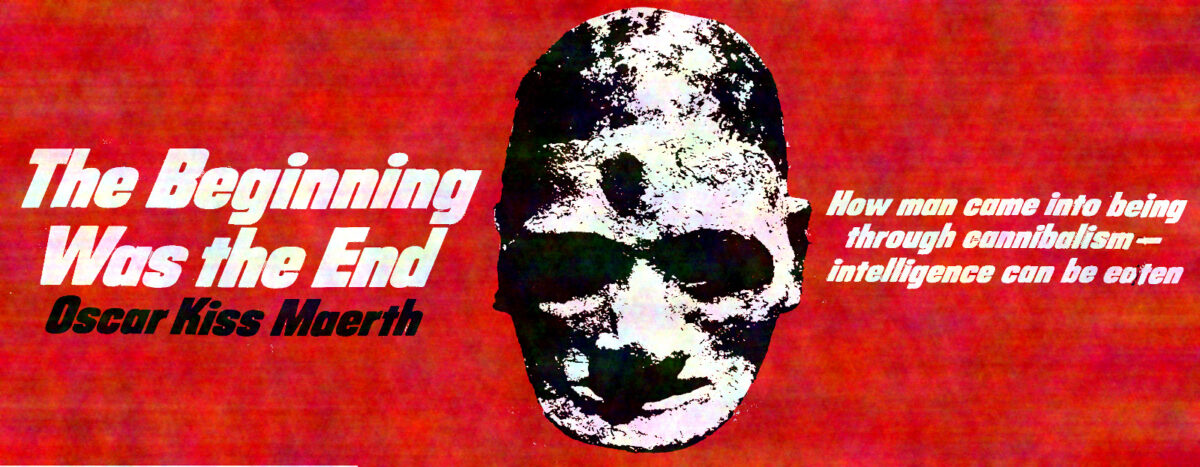

I started thinking about how these models are produced by consuming vast volumes of information filtered through the expedience of what’s available on the Internet. Out of this cauldron of goo come offerings. They are a momentary consensus of the ghosts in the machine. Shout your command, “Hearty stew with root vegetables, beans and mutton”, and out of the miasma come four attempts to comply. The first spoonful is too hot. The second bite includes coffee beans. The third includes a miniature sheep. Probably one of the four is close, but you’ll spice it up anyway by tweaking the prompt.

The ghosts produce this consensus and seem to ask you to make the final decision. It reminds me of Howard Roark’s trial where he talks about there being no collective brain.

> There is no such thing as a collective brain. There is no such thing as a collective thought. An agreement reached by a group of men is only a compromise or an average drawn upon many individual thoughts. It is a secondary consequence. The primary act—the process of reason—must be performed by each man alone. We can divide a meal among many men. We cannot digest it in a collective stomach. No man can use his lungs to breathe for another man. No man can use his brain to think for another. All the functions of body and spirit are private. They cannot be shared or transferred.
>
> The Fountainhead, Ayn Rand

These AI models are the closest approximations of a collective brain yet produced. What they produce is often incoherent, even marked by insanity. The results can be valuable when an individual puts in the effort to rationalize them. Aside from the creator applying craft to the generated product, the spectator draws from the context to make sense of the experience. Yesterday, I enjoyed an AI cover of Paul McCartney singing Take On Me. (The vocals from an acoustic a-ha performance are replaced with a McCartney voice model.) Being prompted with the suggestion that the voice was the famous Beatle lends power to the illusion. And when you hear it, you might remark, “Wow, that’s crazy.”

Consider the source of the data used by the models: the Internet at large. It’s a noisy, obnoxious place. You’ve blocked plenty of jerks from your social media feeds, and you don’t bother reading annoying blogs, but the information is still out there. It was all scraped off and stuffed into one vat of slop from which we randomly pluck chunks.

It’s often ugly or disturbing, similar to looking into a mirror or riding BART. The full spectrum of ideas expressed on the public Web, both good and evil, is projected outward, and if you don’t angle the prism just so, you get a glimpse into a world of horror. There may be a few simple precautions in place, like being handed dark glasses during an eclipse, but staring directly into the sun is always a choice. It can be painful.

In reaction, the censors emerge to better affix the protections. Naturally, the vendors do not wish to sell certain unacceptable ideas, even if they are user-generated. Microsoft cannot afford to be the source of Mickey Mouse depicted performing off-brand acts such as flying a plane into New York City.

In my favorite hobby, roleplaying games, I often use random tables, as is traditional. These tables combine to produce multi-part constructs. The classic example is Appendix A of the Dungeon Masters Guide, which generates dungeon maps. The text states upfront that the model, composed of more than twenty lookup tables, can produce unwanted results that the user can discard or modify.

> Discretion must prevail at all times. For example: if you have decided that a level is to be but one sheet of paper in size, and the die result calls for something which goes beyond an edge, amend the result by rolling until you obtain something which will fit with your predetermined limits. Common sense will serve. If a room won’t fit, a smaller one must serve, and any room or chamber which is called for can be otherwise drawn to suit what you believe to be its best positioning.
>
> Dungeon Masters Guide, Appendix A, Gary Gygax

This process is the low-fi equivalent of prompt engineering and post-production work done on AI images. I use tables to generate the contents of rooms, and the results sometimes present a puzzle. Why are giant beetles guarding glass jars filled with tree bark? I can invent an explanation, perhaps adding clues, such as a diary kept by a druid taking samples from trees. Or I can let the mystery hang there for the players to sort out. That’s when the game can be surprising and delightful as the players invent explanations I could not expect.
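The roll, check, and reroll loop can be sketched in a few lines of Python. This is only an illustration: the three tables and the `generate_room` function are invented for the example and are not the actual Appendix A entries, which are far more elaborate.

```python
import random

# Hypothetical tables for illustration only; these are not the real
# Appendix A entries from the Dungeon Masters Guide.
ROOM_SIZES = [(10, 10), (20, 20), (30, 30), (20, 40), (40, 60)]
GUARDIANS = ["giant beetles", "skeletons", "giant rats", "nothing"]
CONTENTS = ["glass jars of tree bark", "a locked chest", "a dry fountain", "rubble"]


def roll(table, rng):
    """Pick one entry from a lookup table, like rolling a die."""
    return rng.choice(table)


def generate_room(max_width, max_length, rng, max_rerolls=20):
    """Roll on each table, rerolling any room size that will not fit."""
    for _ in range(max_rerolls):
        width, length = roll(ROOM_SIZES, rng)
        if width <= max_width and length <= max_length:
            break
    else:
        # Every roll was too big, so a smaller room must serve:
        # take the smallest table entry and trim it to the limits.
        width, length = min(ROOM_SIZES)
        width = min(width, max_width)
        length = min(length, max_length)
    return {
        "size": (width, length),
        "guardian": roll(GUARDIANS, rng),
        "contents": roll(CONTENTS, rng),
    }


if __name__ == "__main__":
    rng = random.Random(2017)  # fixed seed so a run can be repeated
    print(generate_room(max_width=30, max_length=30, rng=rng))
```

The code only enforces the page limits. Deciding why the beetles are guarding the jars is still left to the person at the table.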

In a larger scope, the entire RPG campaign is an exercise in consensus world building. The game rules provide some structure and imply a world. If we’re playing Dungeons & Dragons, we know we’re in a world where gold coins are money, and brave adventurers go off to find dungeons in hopes of finding gold and growing more powerful. As the game master, I add even more structure. I can declare only humans can be clerics. I can give an XP bonus to dwarf characters who hoard their gold. The other players build the world with their choices as well.

Nothing in the implied world or in anything I planned anticipated the players spending a lot of time knocking monsters unconscious and bringing them back to town. The game does have rules for subduing enemies. From that kernel, the players in my long-term campaign invented the enterprise of capturing wild animals and monsters to sell in town. They relish the idea of running across wandering giant animals to place in cages.

The players behaved as if there were a market for giant animals. I didn’t dismiss the idea, so it became part of the game world. If everyone else around the table had declared it a rotten idea, maybe we’d have discarded it. It led to more interesting events. After the exhibition of a giant python in the town square, everyone knew the adventurers had been into the swamp, where trespass is forbidden by decree. That created an interesting interaction with the town sheriff.

The experience of the game is an evolving consensus that produces entertainment. It’s an average of the expectations of the players and the game authors. The game rules are mostly static, of course. The players add new ideas consistently. The game pleases everyone.

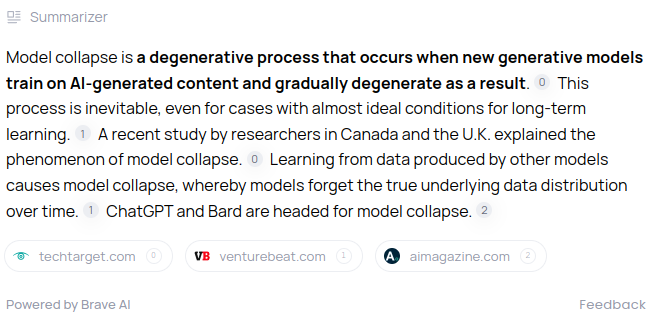

Now consider injecting the entire consensus of the Internet into the game world. Google Bard says it can take “several thousand” tokens as part of a prompt. It can’t track everything that happens in one game session, much less the entire chronicle of the game going back years. It can draw upon the generic information pulled from the Internet, and it might know something about the Basic Fantasy RPG campaign I’ve run since 2017 and relentlessly document on EmptyZ. When I asked it, “Who are Tienarth’s Raiders?”, it guessed that I was talking about Dungeons & Dragons and then made up everything else.

> Tienarth’s Raiders are a fictional mercenary group in the Dungeons & Dragons campaign setting of Forgotten Realms. They are led by the half-orc Tienarth, a skilled tactician and warrior. The group is known for their ruthlessness and efficiency, and they are often hired by wealthy patrons to undertake dangerous or difficult missions.
>
> “Who are Tienarth’s Raiders?”, Google Bard

Tienarth is an elf magic-user, the game is BFRPG, and the Raiders don’t work for wealthy patrons. Almost everything in the response was generic tabletop roleplaying game dreck. It’s as if all the competing thoughts about a band of adventurers canceled out to equal nothing. Maybe it’s like that Harry Nilsson line from The Point, “A point in every direction is the same as having no point at all.”

I’d almost rather the model told me Tienarth’s Raiders are a type of cheesecake made from radioactive stardust, not something that seems sensible but is completely wrong. It asks too much of the public, generic models to provide anything meaningful to the personal game world built by a small group of friends. A model fine-tuned on issues of Dragon Magazine and White Dwarf could be interesting, though.

I wonder how long it will be until we can check off boxes of data from different subcultures (e.g., mix in Dragonsfoot, exclude The Forge) to fine-tune the models on demand.